Train your own AI models from scratch

Skip the guesswork and accelerate your model training jobs with our field-tested recipes. Each recipe comes with a proven architecture, optimised hyperparameters, and a validated training strategy that have already delivered results.

Cost Effective

Cut inference costs by deploying small, optimised models that are purpose-built from scratch for your use case.

Accurate

Surpass fine-tuned and general-purpose models in accuracy by training a model for your specific task.

Fast

Smaller, optimised models run orders of magnitude faster than larger general-purpose models.

It all starts with data

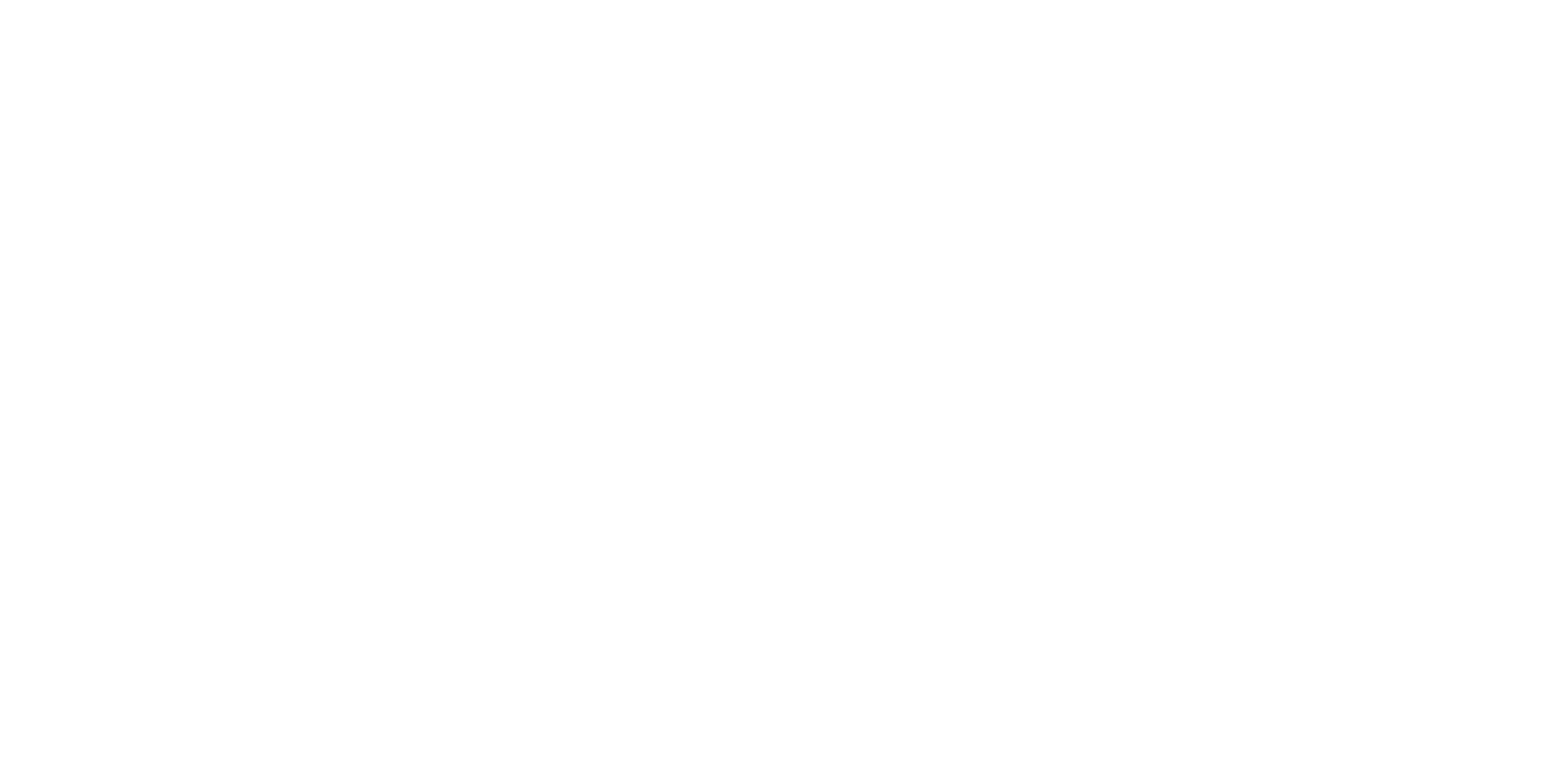

First Step: Training Data

Just as fine food requires high-quality ingredients, training a model from scratch requires high-quality data. The Neuralfinity platform allows you to bring your own data, but we also provide a Michelin-star-quality dataset from which your model can learn anything from factual knowledge to writing styles.

Choose your architecture

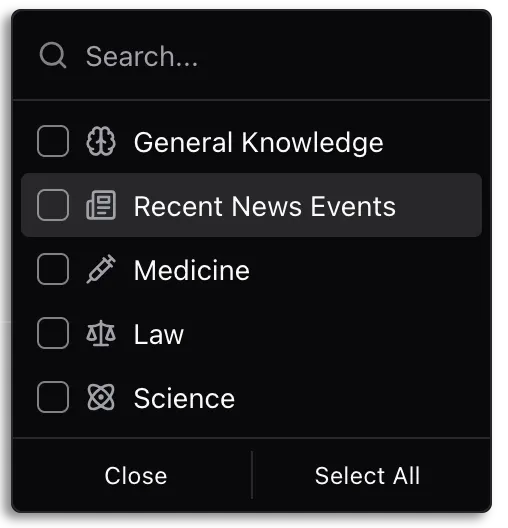

Second Step: Model Architecture

We support a number of open architectures, such as Llama and GPT, as well as our in-house NLM series architecture, whose long-context ability is limited only by the memory available at inference time.

Automated model training - anywhere!

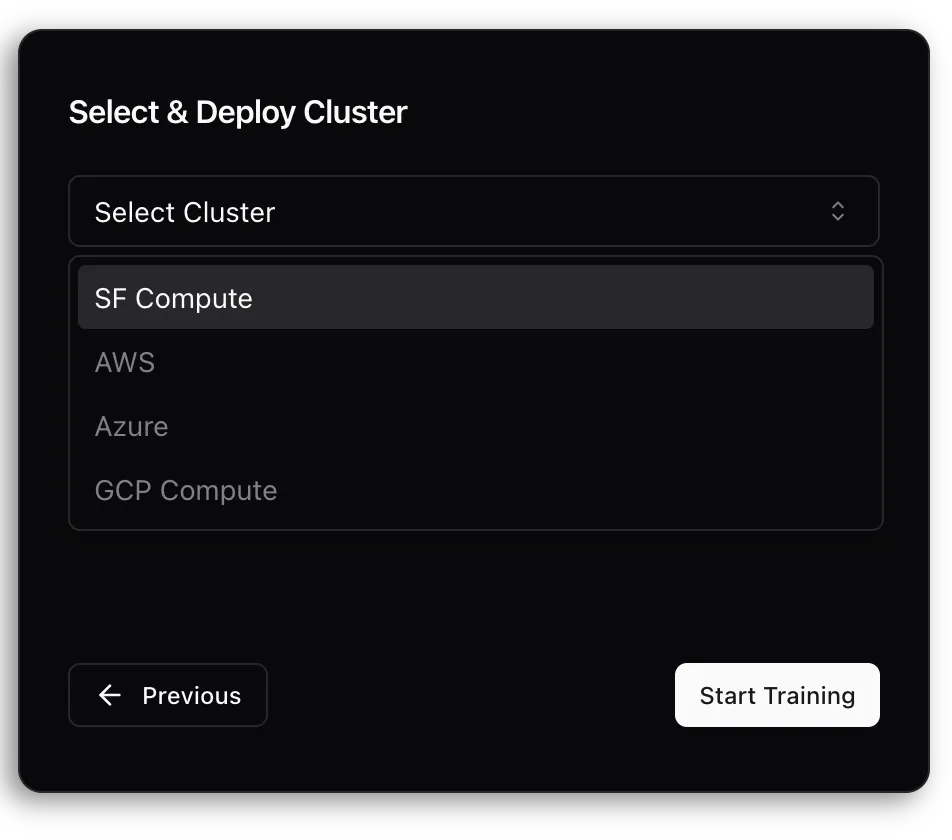

Third Step: Compute Orchestration

The Neuralfinity platform automatically specs a cluster for training your model, deploys it to one of a range of supported cloud providers or to on-premise infrastructure, and manages the training process for you. You can monitor training in real time while we take care of checkpointing, recovery from hardware failures, and more.
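To give a feel for what checkpointing and failure recovery involve, here is a minimal, illustrative Python sketch. It is not the Neuralfinity implementation or API: the function names, the JSON checkpoint format, the placeholder loss value, and the `fail_at` parameter used to simulate a hardware failure are all assumptions made for this example.

```python
import json
import os
import tempfile


def save_checkpoint(path, state):
    """Write the state to a temp file, then atomically swap it into place."""
    directory = os.path.dirname(os.path.abspath(path))
    fd, tmp_path = tempfile.mkstemp(dir=directory, suffix=".tmp")
    with os.fdopen(fd, "w") as handle:
        json.dump(state, handle)
    # os.replace is atomic, so a crash never leaves a half-written checkpoint.
    os.replace(tmp_path, path)


def load_checkpoint(path):
    """Return the last saved state, or a fresh state if none exists."""
    if not os.path.exists(path):
        return {"step": 0, "loss": None}
    with open(path) as handle:
        return json.load(handle)


def train(path, total_steps, checkpoint_every, fail_at=None):
    """Toy training loop that resumes from the last checkpoint.

    fail_at is a hypothetical knob that simulates a hardware failure.
    """
    state = load_checkpoint(path)
    for step in range(state["step"] + 1, total_steps + 1):
        if fail_at is not None and step == fail_at:
            raise RuntimeError("simulated hardware failure")
        # Placeholder for a real optimisation step.
        state = {"step": step, "loss": 1.0 / step}
        if step % checkpoint_every == 0 or step == total_steps:
            save_checkpoint(path, state)
    return state
```

In this sketch, a run that fails partway through loses only the work done since the last checkpoint; calling `train` again with the same path picks up from the saved step rather than from the beginning.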

How does it perform?

Last Step: Evaluate

A model built for your task needs to perform well for your use case. Our industry-leading evaluation tools let you specify exactly how performance is measured and which metrics you want to optimise for. You can even set a baseline using the open and commercial models you may currently be using.
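As a rough illustration of what evaluating against a baseline with a user-defined metric can look like, here is a minimal Python sketch. It does not use the Neuralfinity evaluation tools: `exact_match`, `evaluate`, and `compare_to_baseline` are hypothetical names, and models are represented as plain functions from input text to output text.

```python
from typing import Callable, Dict, Sequence, Tuple

Example = Tuple[str, str]             # (input text, expected output)
Model = Callable[[str], str]          # any function mapping input to output
Metric = Callable[[str, str], float]  # scores (prediction, expected)


def exact_match(prediction: str, expected: str) -> float:
    """Example metric: 1.0 if the answers match, ignoring case and whitespace."""
    return 1.0 if prediction.strip().lower() == expected.strip().lower() else 0.0


def evaluate(model: Model, examples: Sequence[Example], metric: Metric) -> float:
    """Average the metric over all examples."""
    if not examples:
        raise ValueError("at least one example is required")
    scores = [metric(model(text), expected) for text, expected in examples]
    return sum(scores) / len(scores)


def compare_to_baseline(
    candidate: Model,
    baseline: Model,
    examples: Sequence[Example],
    metric: Metric,
) -> Dict[str, float]:
    """Score both models on the same examples and report the difference."""
    candidate_score = evaluate(candidate, examples, metric)
    baseline_score = evaluate(baseline, examples, metric)
    return {
        "candidate": candidate_score,
        "baseline": baseline_score,
        "delta": candidate_score - baseline_score,
    }
```

Swapping `exact_match` for another function with the same signature changes how performance is measured without touching the comparison logic, which is the main point of keeping the metric as a parameter.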